Introduction

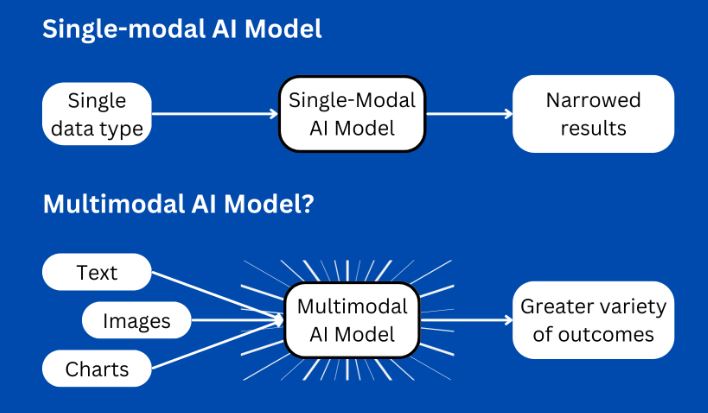

Artificial Intelligence (AI) is evolving rapidly, and multimodal AI is leading the charge. Unlike traditional AI models that process a single type of data, such as only text or only images, multimodal AI combines multiple data types, like text, audio, photos, and video, so it has more context to work with when making decisions.

What is Multimodal AI?

Imagine an AI that doesn’t just read text or look at pictures, but can do both simultaneously, just like humans do. That’s multimodal AI in a nutshell. It combines different types of information to understand the world more like we do.

Why this matters:

- Fewer mistakes (two heads are better than one)

- More natural interactions (like chatting with a real person)

- Works in more situations (from hospitals to self-driving cars)

Multimodal AI: How It Works (Without the Tech Jargon)

Think of multimodal AI like teaching a child:

- It looks at everything – reads words, sees pictures, hears sounds

- Connects the dots – learns that “dog” goes with a picture of a dog

- Gets smarter over time – the more it sees, the better it understands
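If you are curious what "connecting the dots" can look like in code, here is a minimal sketch. It assumes a trained model has already turned each word and each picture into a short list of numbers (often called a vector); the numbers and file names below are made up for illustration, and real systems use much longer vectors. The idea is simply that a word and a matching picture end up with similar numbers.

```python
import math

def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-written vectors standing in for what a trained model would produce.
text_vectors = {
    "dog": [0.9, 0.1, 0.0],
    "car": [0.0, 0.2, 0.9],
}
image_vectors = {
    "dog_photo.jpg": [0.8, 0.2, 0.1],
    "car_photo.jpg": [0.1, 0.1, 0.95],
}

def best_image_for(word):
    """Pick the image whose vector is most similar to the word's vector."""
    query = text_vectors[word]
    return max(image_vectors, key=lambda name: cosine_similarity(query, image_vectors[name]))

print(best_image_for("dog"))
print(best_image_for("car"))
```

With these example numbers, the word "dog" is matched to the dog photo because their vectors point in nearly the same direction.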

Multimodal AI: How to Use It (Even If You’re Not a Tech Expert)

Step 1: Pick Your Project

What problem do you want to solve? Some easy starters:

- A shop assistant who understands photos and questions

- A safety system that watches and listens at the same time

Step 2: Use Ready-Made Tools

You don’t need to build from scratch. Try:

- Google Lens (for pictures + text)

- OpenAI’s tools (for writing + images)

- Amazon’s Alexa (for voice + smart home control)

Step 3: Teach It Your Way

Show it examples of what you want it to learn. The more good examples it sees, the better it tends to get.
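For the curious, here is a toy sketch of what "teaching by example" can mean. It is not how any particular product works. It uses a very simple technique (a nearest-centroid classifier) and assumes each example has already been turned into four made-up numbers: two describing a photo and two describing the text that came with it. The labels "shoe" and "hat" are hypothetical.

```python
def average(vectors):
    """Element-wise average of equal-length lists of numbers."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def train(examples):
    """Group examples by label and store one average vector per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {label: average(vectors) for label, vectors in by_label.items()}

def predict(model, features):
    """Return the label whose average vector is nearest to the given features."""
    def squared_distance(center):
        return sum((x - c) ** 2 for x, c in zip(features, center))
    return min(model, key=lambda label: squared_distance(model[label]))

# Hypothetical examples: first two numbers come from a photo, last two from the text.
examples = [
    ([0.9, 0.1, 0.8, 0.0], "shoe"),
    ([0.8, 0.2, 0.9, 0.1], "shoe"),
    ([0.1, 0.9, 0.0, 0.9], "hat"),
    ([0.2, 0.8, 0.1, 0.8], "hat"),
]

model = train(examples)
print(predict(model, [0.85, 0.15, 0.7, 0.1]))
```

Each new labeled example nudges the average for its label, which is a small-scale picture of why adding good examples usually helps.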

Step 4: Try It Out

Start small, see how it works, and improve as you go.


Multimodal AI: Why Businesses Love This

- Customer service that understands you

- Doctors who get help analyzing scans and records

- Stores that can find products from photos

- Safer workplaces with smart monitoring

Common Hurdles (And Easy Solutions)

Problem: It needs lots of examples

Fix: Start with free sample data online

Problem: It can run slowly on an ordinary computer

Fix: Use cloud services that handle the hard work

Problem: Might learn biases

Fix: Regularly check that its decisions are fair
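One simple way to start checking fairness is to compare how often the AI is right for different groups of people. The sketch below assumes you have kept a log of decisions along with the correct answers; the group names and results are invented for illustration, and a real fairness review would look at more than this one number.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: fraction of correct decisions} for (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}

# Made-up log entries: (group, what the AI decided, what the right answer was)
results = [
    ("group_a", "approve", "approve"),
    ("group_a", "reject", "reject"),
    ("group_a", "approve", "approve"),
    ("group_a", "approve", "reject"),
    ("group_b", "reject", "approve"),
    ("group_b", "reject", "reject"),
    ("group_b", "reject", "approve"),
    ("group_b", "approve", "approve"),
]

scores = accuracy_by_group(results)
gap = max(scores.values()) - min(scores.values())
print(scores)
print("Accuracy gap between groups:", gap)
```

A large gap between groups is a signal to look more closely at the examples the system learned from.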

What’s Coming Next

- AI that can sense textures or smells (for example, in food or product testing)

- Instant translation for videos with speech and text

- Movie-making AI that writes scripts and creates visuals

Quick Questions Answered

Q: How is this different from Siri or Alexa?

A: Those mostly hear you. Multimodal AI also sees and understands pictures, videos, and more.

Q: Can I try this without coding?

A: Yes! Many tools like Google Lens or ChatGPT with images need no programming.

Q: Will this replace human jobs?

A: It’s more about helping people work better, like giving doctors extra eyes to analyze scans.

Q: Is it expensive?

A: Many basic tools are free to try. Costs go up for advanced business uses.

Q: What’s the easiest way to start?

A: Play with free image+text tools online to see how they work.

Multimodal AI: Getting Started Is Easy

You don’t need to be a tech expert to use this. Here’s how to dip your toes in:

- Take a picture with Google Lens and see what it recognizes

- Experiment with ChatGPT’s image features

- Look at how stores use visual search on their websites

The technology is here and ready to use today. Why not give it a try?

Remember These Key Points

- It combines different senses, like we do

- Many free tools exist to experiment with

- Start small and grow as you learn

- The future will bring even more amazing uses

The best way to learn? Just start playing with it! What will you create first?